By: William Bradford, Business Development

A recent article in the International Journal of Public Opinion Research asserted that data fabrication does not affect overall correlational results. The authors used survey data from a single study in Venezuela with a high volume of detected falsified interviews to conclude that “enumerator fabrication may not constitute a grave threat to political science research” because “enumerators largely fabricate plausible data.”1

However, this finding runs counter to ORB’s more than 10 years of experience managing global data collection and verification in complex and hard-to-reach environments. We have found that survey fabrication can and does skew both individual variables and larger correlations. For this reason, ORB upholds a rigorous standard of quality control to ensure the data we use in analysis are reliable and accurate.

Our Approach to Quality Control

ORB was founded on the principle that only well-sourced, reliable data can support accurate and informed conclusions. Over the past 10 years, ORB has continually raised its standards, especially during the discussions prompted by Kuriakose and Robbins’ 2015 article on international data quality. Today, ORB verifies its data sets using GPS verification, time stamps, survey length, and random checks of audio recordings.
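As a rough illustration of how checks like these might be automated, the sketch below flags an interview when its GPS reading is far from the assigned location, when it was completed unusually quickly, or when it started outside working hours. This is not ORB's actual pipeline: the `Interview` fields, the `qc_flags` helper, and every threshold are hypothetical values chosen only for the example.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Interview:
    # All field names are hypothetical, chosen for this illustration only.
    interview_id: str
    lat: float
    lon: float
    target_lat: float
    target_lon: float
    duration_min: float
    start_hour: int  # local hour of day, 0-23


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    earth_radius_km = 6371.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_km * asin(sqrt(a))


def qc_flags(iv, max_km=1.0, min_minutes=15.0, work_hours=(7, 20)):
    """Return the list of checks an interview fails (thresholds are assumptions)."""
    flags = []
    if haversine_km(iv.lat, iv.lon, iv.target_lat, iv.target_lon) > max_km:
        flags.append("gps")        # recorded far from the assigned sampling point
    if iv.duration_min < min_minutes:
        flags.append("too_short")  # completed faster than the questionnaire plausibly allows
    if not (work_hours[0] <= iv.start_hour < work_hours[1]):
        flags.append("odd_hour")   # started outside the expected fieldwork window
    return flags
```

In practice, flagged interviews would still need human review, for example by listening to the audio recording, before being treated as fabricated.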

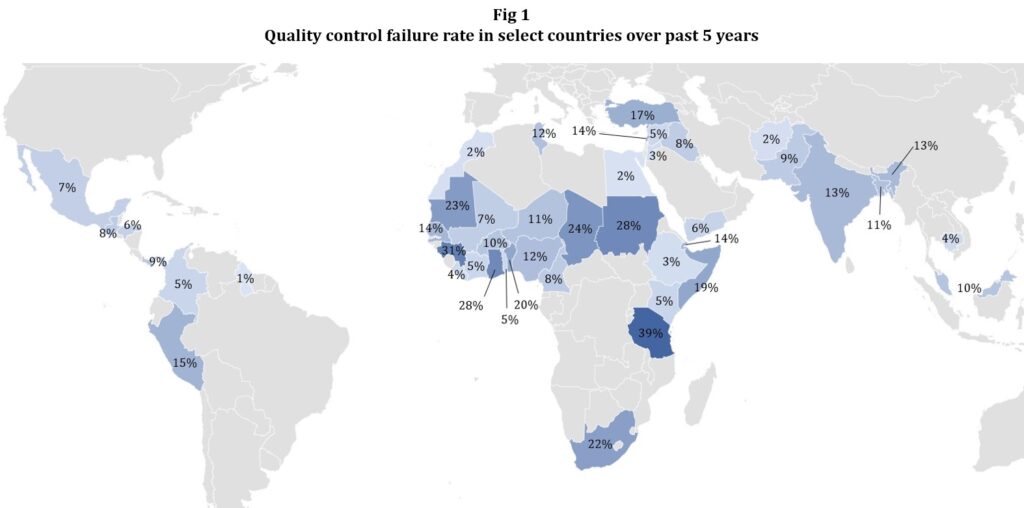

Our practices detect many failed surveys and interviews in the countries in which we work (Fig 1). This shows that fraudulent and low-quality surveys are commonplace. However, their frequency would be unimportant if they did not affect the resulting correlations.

ORB conducted an internal study in 2018 to understand the extent to which falsified surveys and interviews affect our data sets. Using data from many studies across nine countries in Africa, Asia, and the Middle East, we calculated the difference in means for each variable while controlling for differences between countries, then graphed the results by the size of the fraudulent data’s effect. The study found that fraudulent interviews significantly skewed 51% of the variables.
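The article does not specify the statistical procedure used, so the following is only one simple way such a comparison could be set up. It assumes each record is a hypothetical `(country, is_flagged, value)` tuple, removes country-level differences by subtracting each country's mean, and then compares flagged and clean interviews with a Welch t statistic. The function names and the data layout are assumptions made for this sketch.

```python
from math import sqrt
from statistics import mean, variance


def demean_by_country(rows):
    """rows: iterable of (country, is_flagged, value).

    Returns (is_flagged, value minus that country's mean) for each row,
    a simple way to control for level differences between countries.
    """
    rows = list(rows)
    by_country = {}
    for country, _, value in rows:
        by_country.setdefault(country, []).append(value)
    country_mean = {c: mean(v) for c, v in by_country.items()}
    return [(flagged, value - country_mean[country]) for country, flagged, value in rows]


def welch_t(a, b):
    """Welch's t statistic for two samples with possibly unequal variances."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


def flagged_vs_clean(rows):
    """Return (difference in adjusted means, Welch t statistic) for one variable."""
    adjusted = demean_by_country(rows)
    flagged = [v for is_flagged, v in adjusted if is_flagged]
    clean = [v for is_flagged, v in adjusted if not is_flagged]
    return mean(flagged) - mean(clean), welch_t(flagged, clean)
```

Running `flagged_vs_clean` once per variable and counting how many t statistics exceed a chosen threshold would give a share of affected variables comparable in spirit to the figure reported above, though a full analysis would also need to account for multiple comparisons and survey design.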

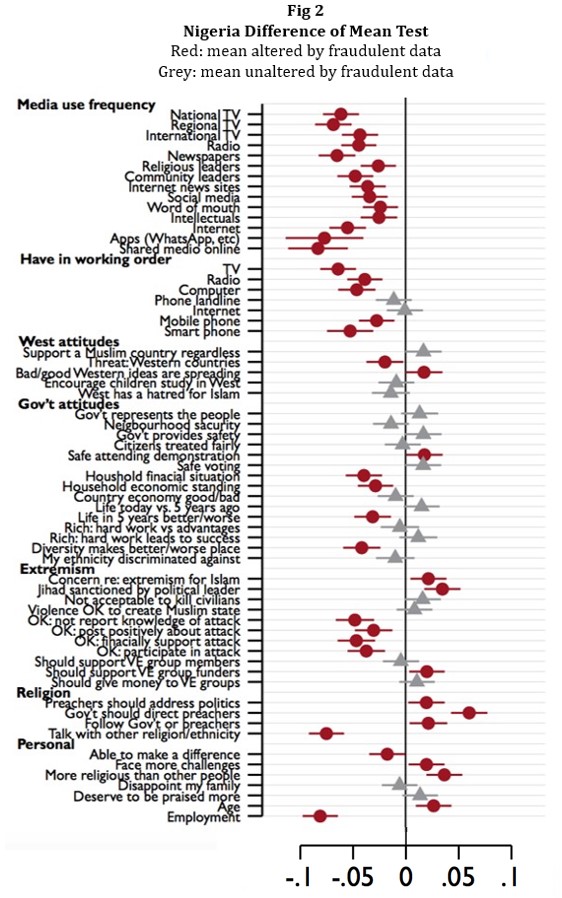

In one example, failing to remove fraudulent interviews in a study in Nigeria significantly altered the mean of 42 of 63 variables (Fig 2 – red points are affected). In other words, tolerating fraud changed two-thirds (67%) of our measured variables. Significant differences in means were also found in the other eight countries. These differences were not confined to one topic; they affected every topic, including reported employment rate, perceptions of threat from the West, and even fundamental demographics like the age of respondents.

Besides influencing the means, ORB found that fabricated data reduced:

1. the level of response variance (41% different),

2. the occurrence of extreme answers,

3. the rate of refusals or “don’t know” answers,

4. the rate of conflicting answers, and

5. the rate of “other” answers.

These inaccuracies resulted in deletion rates of between 10% and 24% of responses.
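Several of the indicators listed above can be computed per question and compared between flagged and clean interviews. The sketch below does this for a single rating-scale item, assuming answers are coded 1 to 5 and that `None` stands for a refusal or “don’t know”. The coding scheme and the `response_profile` helper are hypothetical, not taken from ORB's study.

```python
from statistics import pvariance


def response_profile(answers, scale_min=1, scale_max=5):
    """Summarise one question's answers.

    answers: list of numeric codes, with None for refusal / "don't know".
    Returns the variance of substantive answers, the share of answers at
    either end of the scale, and the share of non-responses.
    """
    if not answers:
        raise ValueError("answers must not be empty")
    substantive = [a for a in answers if a is not None]
    total = len(answers)
    return {
        "variance": pvariance(substantive) if substantive else 0.0,
        "extreme_rate": sum(a in (scale_min, scale_max) for a in substantive) / total,
        "nonresponse_rate": (total - len(substantive)) / total,
    }
```

Comparing `response_profile(clean_answers)` with `response_profile(flagged_answers)` for the same question would show whether the flagged set has lower variance, fewer extreme answers, and fewer non-responses, which is the pattern described above.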

Conclusion

ORB has found that low-quality data can significantly skew survey results, even with a deletion rate as low as 2%. Political science researchers need to be aware that data fabrication can change the recommendations we draw for our international leaders, local actors, and global conscience.

At the same time, we must be aware of research managers’ responsibilities in managing survey fraud. Conducting face-to-face surveys in fragile environments is a complex task, and enumerators can be overwhelmed by the sensitive nature of the survey, difficult travel, and shifting security conditions. This means that quality control starts with the research team: researchers must work proactively to help enumerators produce accurate data by providing them with the tools and skills necessary to carry out difficult research. This includes writing effective and culturally cognizant surveys, choosing pertinent and attainable locations, and providing comprehensive training and vetting so that enumerators have the right skills. Rigorous back-end quality control practices also need to be in place to refine the field results.

This peer-to-peer, multi-step approach ensures appropriate quality controls and verifiable conclusions at every phase of the research process, so that our conclusions lead to a true understanding of key issues in security, development, and peace.

______________________________________

1 Oscar Castorena and others, “How Worried Should We Be? The Implications of Fabricated Survey Data for Political Science,” International Journal of Public Opinion Research, Volume 35, Issue 2, Summer 2023, edad007, https://doi.org/10.1093/ijpor/edad007